Summary/Key Takeaways:

- Use VEED Fabric 1.0 when starting from an image and voice.

- Use Lip Sync API to remap lips in an existing video.

- Always prep your inputs: clean audio, clear visuals, and well-tagged emotion cues.

- Post-process smartly: review, segment, subtitle, and brand your outputs.

- Think scalable: Use APIs to automate lip sync in production workflows.

Syncing an artificial voiceover to lip movements in a video is tedious, error-prone, and often feels impossible to get just right. Even a single-frame mismatch between the video’s lip movements and the voiceover can break immersion. Fixing those frames manually in post-production takes hours. For marketers, creators, and businesses producing explainers, localized content, or AI avatars, this slows everything down. It eats up time, budget, and creative momentum.

Luckily, AI now handles lip-syncing automatically, producing accurate, expressive results in minutes rather than days. In this guide, we'll show you how to create AI lip-sync videos using VEED's most powerful model, Fabric 1.0, which turns a single image and a voice input into a full talking-head video.

We'll walk you through lip-syncing step by step, share tips to avoid common pitfalls, and introduce two alternative tools, VEED's Lip Sync API and D-ID, that work well for dubbing or fast avatar animation.

What is AI lip syncing?

AI lip-syncing uses artificial intelligence to automatically match spoken audio, often from an artificial voiceover, with a person’s lip movements in a video. This allows voiceovers and visuals to be perfectly aligned without manual editing or animation.

Here's how AI lip syncing works:

- Converts speech audio into visemes (visual representations of phonemes)

- Maps these visemes to frame-level lip movement in real-time or pre-rendered footage

- Works with avatars, static images, or pre-recorded video
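The steps above can be sketched as a toy pipeline. This is a minimal illustration, not VEED's internal method: the phoneme symbols, viseme labels, timings, and the `visemes_per_frame` helper are all assumptions made up for the example.

```python
# Toy illustration of phoneme -> viseme -> per-frame mapping.
# The lookup table and labels are invented for this example,
# not taken from any real lip-sync model.
PHONEME_TO_VISEME = {
    "p": "closed", "b": "closed", "m": "closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "aa": "open", "ae": "open",
    "uw": "rounded", "ow": "rounded",
    "iy": "spread", "eh": "spread",
}


def visemes_per_frame(timed_phonemes, fps=25):
    """Return one viseme label per video frame.

    timed_phonemes: list of (phoneme, start_sec, end_sec), sorted by time.
    Frames with no phoneme get the label "rest".
    """
    if not timed_phonemes:
        return []
    total_frames = round(timed_phonemes[-1][2] * fps)
    frames = ["rest"] * total_frames
    for phoneme, start, end in timed_phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, "rest")
        first = round(start * fps)
        last = min(round(end * fps), total_frames)
        for i in range(first, last):
            frames[i] = viseme
    return frames


# The syllable "ma" spoken over 0.2 seconds at 25 fps covers 5 frames.
example = visemes_per_frame([("m", 0.0, 0.08), ("aa", 0.08, 0.2)])
print(example)
```

A production model predicts mouth shapes from the audio signal directly and blends between them, but the core idea is the same: each stretch of speech ends up driving a specific set of video frames.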

How to lip sync videos with AI using VEED Fabric 1.0

The most effective way to create a talking-head video with synchronized lip movement is to use VEED Fabric 1.0. This AI lip-sync video generator lets you create custom avatars from uploaded images and voice recordings, then bring them to life.

Unlike stock avatar tools, Fabric gives you complete creative control over character style, emotion, and presentation.

Here are the tools you’ll need to start lip-syncing videos with AI:

- VEED account with Fabric 1.0 access

- A clear description of your character (or a photo to upload)

- Dialogue input (typed text, uploaded audio, or recorded voice)

Here's how to create a lip-sync AI video in 6 simple steps.

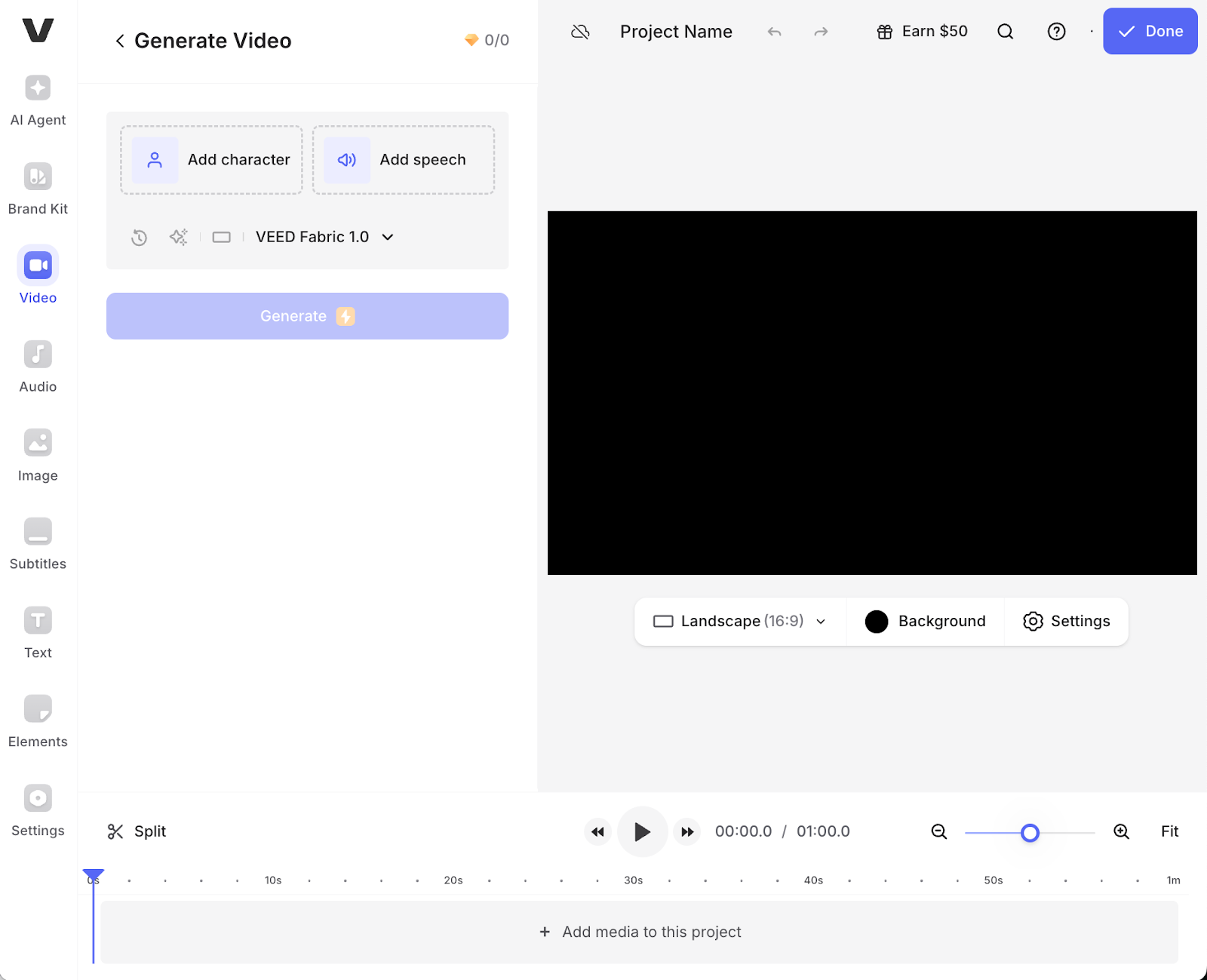

Step 1: Open VEED’s AI Playground and select Fabric 1.0

Open Fabric 1.0 from VEED. This tool lets you create lip-synced talking-head videos featuring your chosen character.

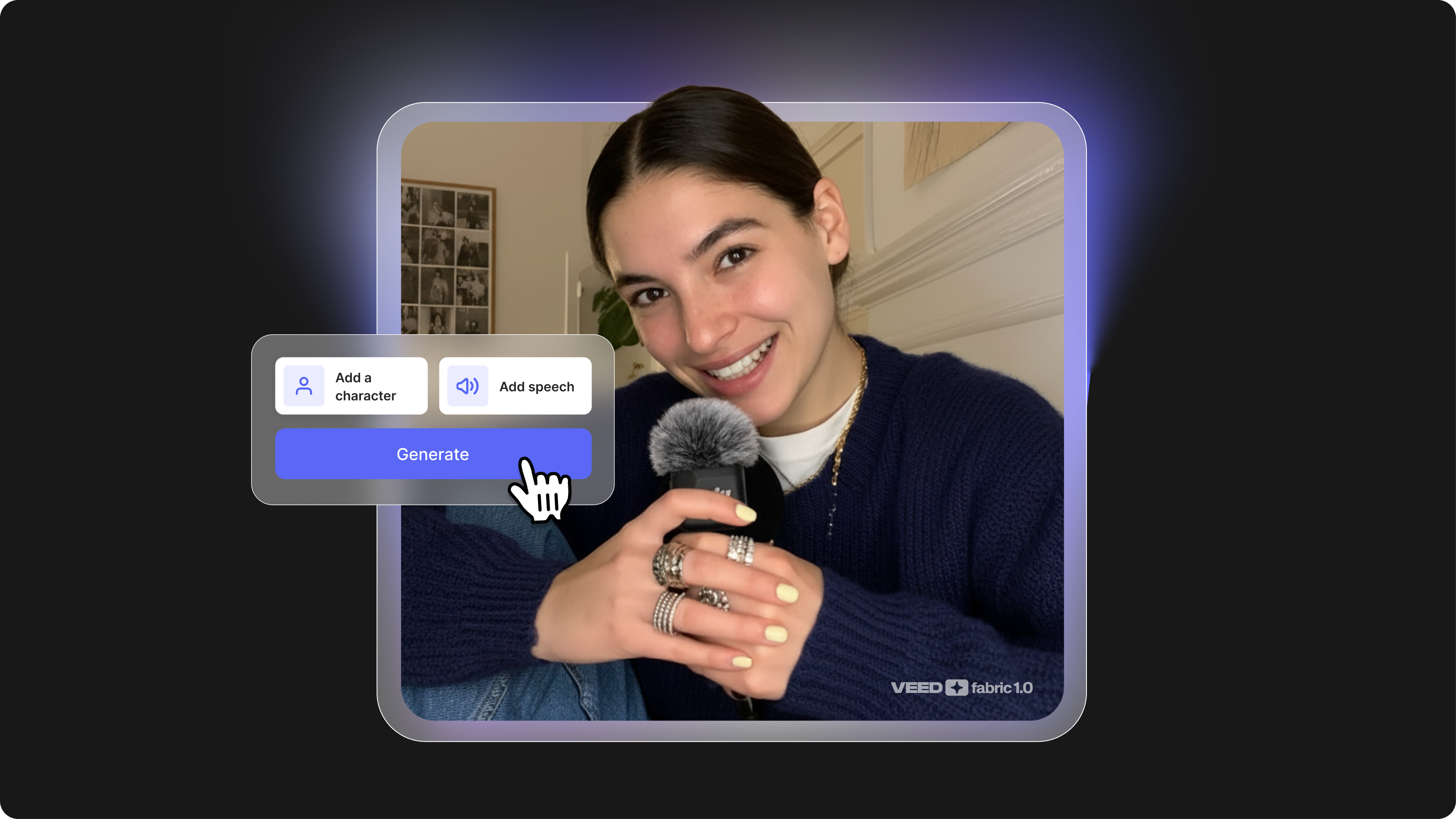

Step 2: Add your character

Upload an image of the character you want to animate (this could be a real person, artwork, or even a brand mascot).

Step 3: Add your speech

Next, upload your audio file. This could be a voice recording, podcast clip, or narration.

Step 4: Choose your desired aspect ratio and resolution

Pick the aspect ratio and resolution that fit the platform you're publishing to. VEED supports the major formats:

- Instagram Stories and Reels: Vertical 9:16

- LinkedIn and Facebook: Square 1:1 or landscape 16:9

- TikTok: Vertical 9:16

- YouTube: Landscape 16:9
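If you plan exports for several platforms, the list above can be turned into a small lookup. This is a sketch under stated assumptions: the platform keys and the `frame_size` helper are hypothetical names, and the 1080-pixel short side is a common convention rather than a VEED setting.

```python
# Aspect ratios follow the platform list above.
# Keys and the helper name are illustrative, not part of any VEED API.
PLATFORM_ASPECT = {
    "instagram_reels": (9, 16),
    "tiktok": (9, 16),
    "youtube": (16, 9),
    "linkedin_square": (1, 1),
    "linkedin_landscape": (16, 9),
}


def frame_size(platform, short_side=1080):
    """Return (width, height) in pixels for a platform preset."""
    w, h = PLATFORM_ASPECT[platform]
    scale = short_side / min(w, h)
    # Round to even numbers, since many video encoders expect even dimensions.
    width = int(round(w * scale / 2) * 2)
    height = int(round(h * scale / 2) * 2)
    return width, height


for name in ("tiktok", "youtube", "linkedin_square"):
    print(name, frame_size(name))
```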

Step 5: Generate the AI lip sync video

Click "Generate" to create your talking video.

Fabric 1.0 synchronizes the character's lip movements with your audio or selected voiceover. The AI analyzes your audio's phonemes and maps them to realistic mouth shapes frame by frame.

Generation typically takes 5-10 minutes, depending on content length and complexity. Longer videos or highly detailed character styles may take slightly more time.

Step 6: Download or edit it further in the VEED editor

Once your video is generated, you can download it and share it. If you want to edit it further, you can import it into VEED's editor and add some final touches like:

- Captions or subtitles to boost engagement (80% of social video is watched without sound)

- Transitions between scenes for multi-section videos

- Background music to enhance emotional impact

- Branding elements like logos, color overlays, or text animations

VEED's editor is built for speed. Everything happens in your browser, so there's nothing to download and no complex software to learn.

Common challenges when learning how to lip sync

- Vague or overly complex prompts can reduce avatar quality. Keep descriptions specific, focusing on 2-3 key characteristics.

- Monotone audio affects expressiveness. If your voiceover sounds flat, your avatar will too. Add emotion and energy to your delivery.

- Style mismatches happen when the voice and visuals don't align. A serious, corporate voice paired with a cartoonish avatar feels jarring. Match tone across both elements.

Timeline expectations

Expect 5-10 minutes per generation, depending on content length and complexity. Simple avatars with short scripts generate faster; detailed characters with longer dialogue take more time.

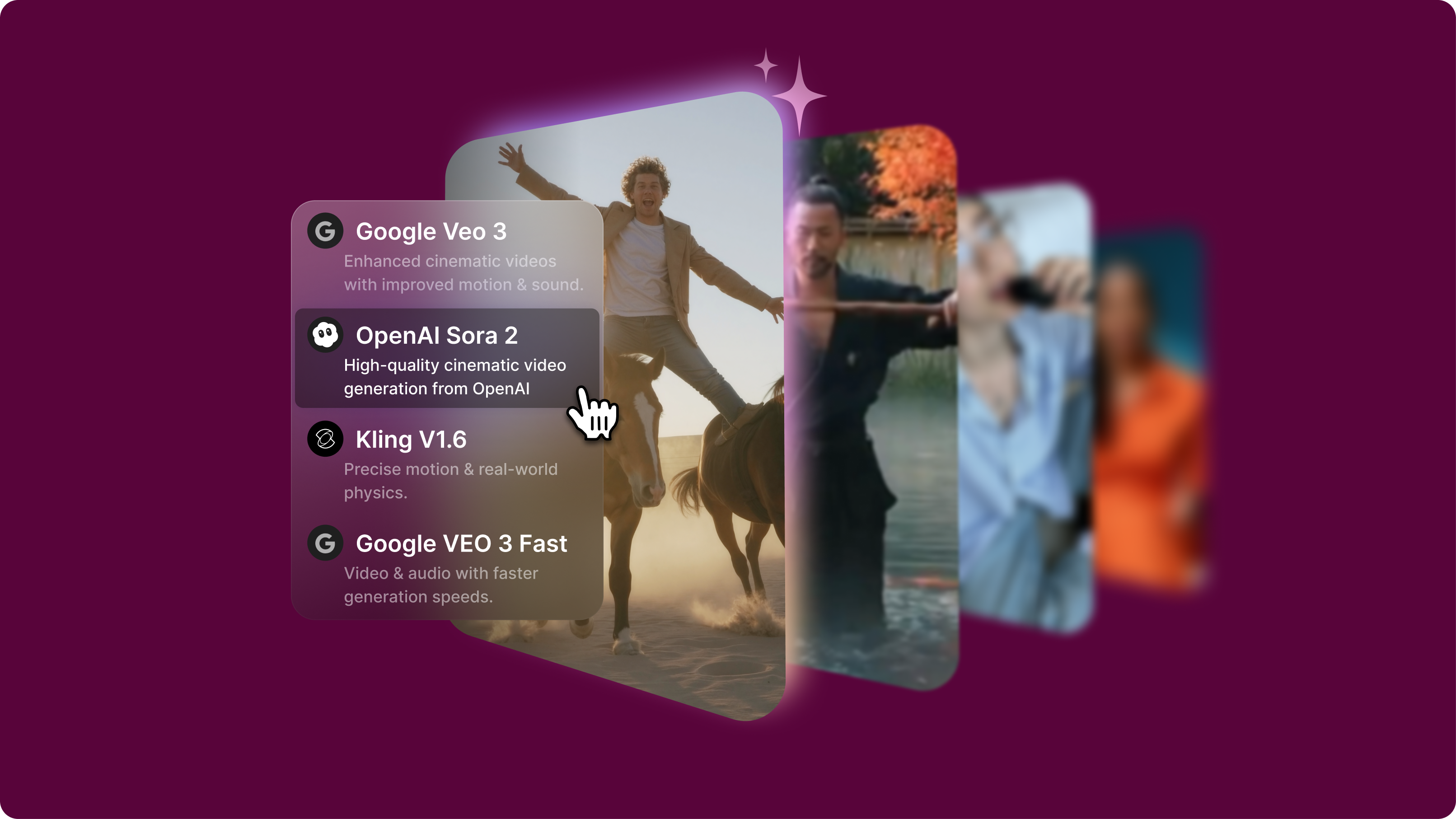

Best tools for lip-syncing videos with AI

The three main approaches to AI-powered lip syncing covered here are VEED Fabric 1.0, VEED Lip Sync API, and D-ID. These tools differ in whether they generate videos from static images, modify existing video footage, or serve as complete avatar solutions.

We tested each platform based on:

- Input types supported (image, video, audio)

- Output quality and realism

- API accessibility and workflow integration

- Speed and scalability

- Use case alignment (marketing, dubbing, personalization)

1. VEED Fabric 1.0

Fabric 1.0 is VEED's AI lip-sync video model, designed to create longer, more expressive talking-head videos directly from your input character and voice recordings. VEED also lets users generate entirely custom characters in different styles, including realistic, claymation, and anime. It's a versatile, creator-friendly tool built specifically for social media storytelling and branding.

Use cases:

- Build brand spokespersons or animated explainers from scratch

- Run A/B tests on avatar styles for different platforms

- Generate TikTok, LinkedIn, or Instagram videos with personalized presenters

Pros:

- Custom character generation with no need for pre-set avatars. Create unique spokespersons that match your brand identity.

- Supports style transfer and emotional expression. Make your avatar happy, serious, enthusiastic, or contemplative based on your content needs.

- Seamlessly integrated with VEED's video editor for full workflow support. Generate, edit, caption, and export without switching tools.

Cons:

- Limited free usage in some regions (India, Pakistan, Bangladesh). Check availability in your area.

Best for: Marketers, educators, and creators seeking fast, flexible talking content with complete creative control

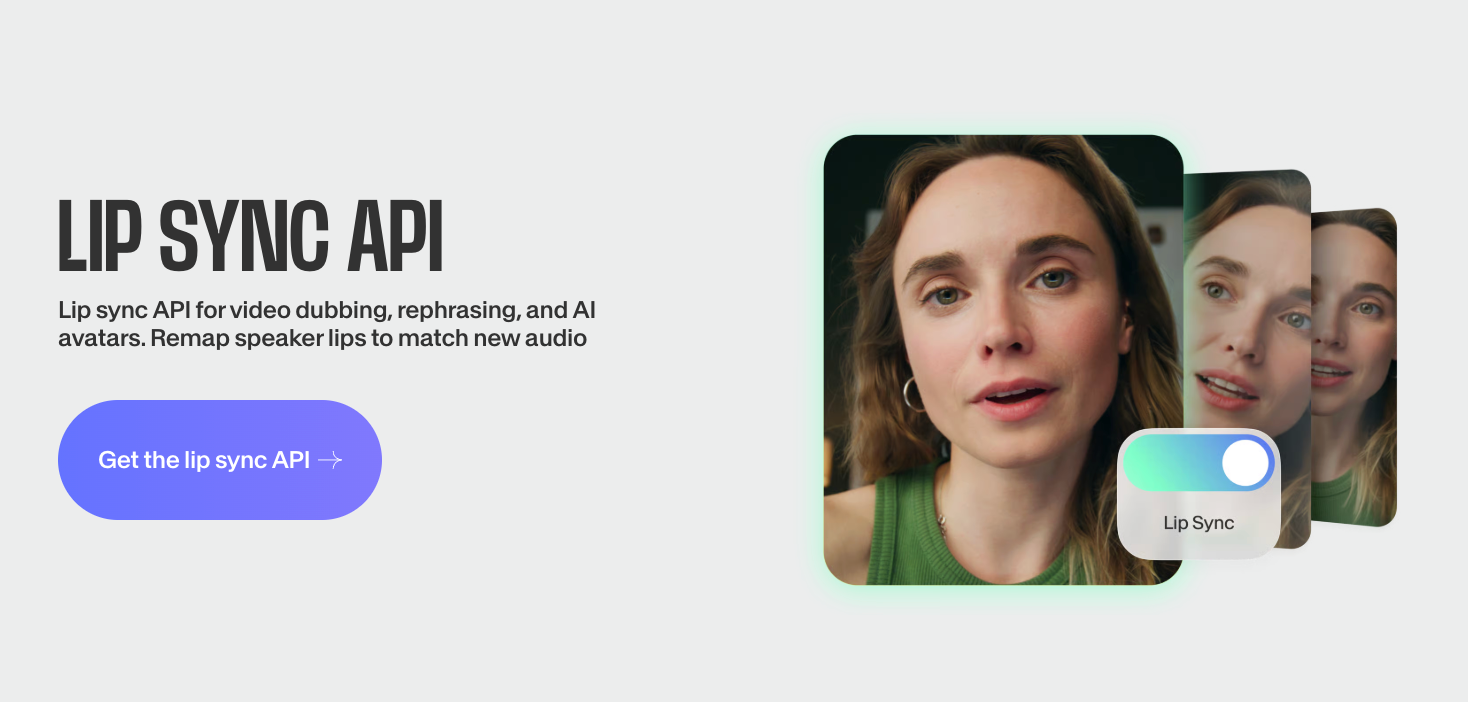

2. VEED Lip Sync API

VEED’s Lip Sync API is a developer-ready tool designed to automatically remap a speaker’s lips to match new audio in existing video footage. Ideal for dubbing, localization, video rephrasing, or creating dynamic AI avatars, it delivers natural lip movements synced precisely to speech. With just two inputs (video and audio), you get back a fully synced MP4 ready for publishing.

Use cases:

- Localize keynote talks and educational videos across languages

- Refresh old video ads with updated audio messaging without reshooting

- Deploy dynamic customer service avatars that respond in real time

- Auto lip sync large video libraries for multilingual markets

Pros:

- Lightning-fast processing at approximately 2-2.5 minutes of processing per minute of video. Scale to hundreds of videos without bottlenecks.

- Affordable at $0.40 per minute with no hidden fees. Transparent pricing makes budgeting simple.

- Handles multiple aspect ratios and video formats. Works seamlessly with landscape, portrait, and square videos.

- No training or complex setup required. Upload your files, hit sync, and download results.

Cons:

- Limited to audio replacement. Not designed for generating avatars from scratch, only for syncing existing footage.

- Requires clean input footage for best accuracy. Low-quality video or poor lighting can reduce sync quality.

Best for: Product teams, localization services, and developers looking to scale high-volume lip-synced content through API integration.
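To budget a batch before you run it, the pricing and speed figures quoted above can be plugged into a quick estimate. This is a rough sketch: `estimate_batch` is a hypothetical helper, and it assumes videos are processed one after another, which may overstate wall-clock time if jobs run in parallel.

```python
PRICE_PER_MINUTE_USD = 0.40    # price quoted above
PROCESSING_RATIO = (2.0, 2.5)  # processing minutes per video minute, quoted above


def estimate_batch(video_minutes):
    """Estimate cost and processing time for a list of video lengths (in minutes)."""
    total = sum(video_minutes)
    low, high = (total * ratio for ratio in PROCESSING_RATIO)
    return {
        "video_minutes": total,
        "cost_usd": round(total * PRICE_PER_MINUTE_USD, 2),
        "processing_minutes": (low, high),
    }


# Three clips of 3, 5, and 2 minutes.
estimate = estimate_batch([3, 5, 2])
print(estimate)
```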

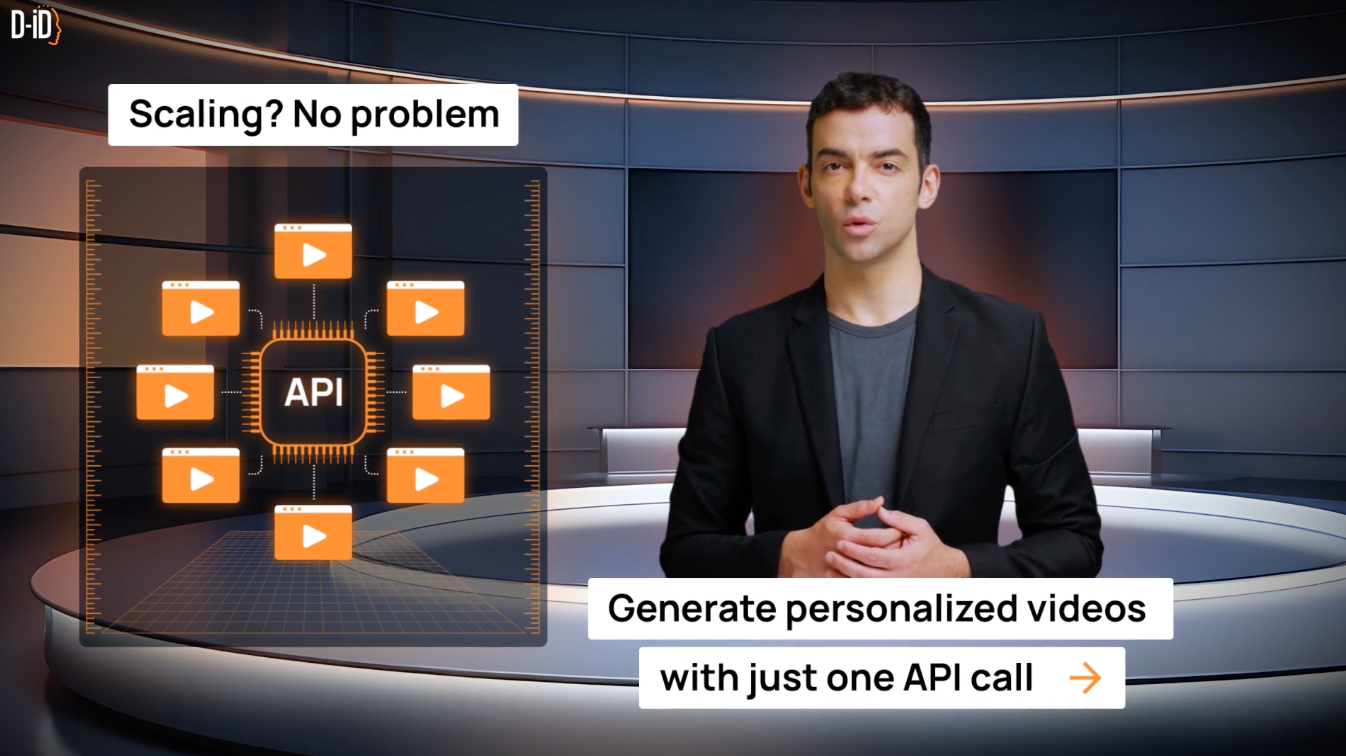

3. D-ID

D-ID is a creative AI platform that brings portraits to life through animated talking-head videos. It supports both UI and API access, enabling users to generate quick, engaging lip-synced videos from still photos using text-to-speech or voice recordings.

Use cases:

- Onboarding and training video creation

- Personalized video campaigns for sales or education

- Social media avatars or virtual presenters

- Quick lip sync video app prototypes

Pros:

- Easy to use, even for non-technical users. Simple drag-and-drop interface gets you started in seconds.

- Realistic facial animations and support for multiple languages. Suitable for global campaigns.

- Fast rendering speeds. Generate videos in minutes.

Cons:

- Less control over animation details compared to Fabric or custom workflows.

- Branding limitations on free tiers. Watermarks appear unless you upgrade.

Best for: Educators and marketers creating low-effort, scalable avatar content without heavy technical investment. Also works well for startups experimenting with AI video.

Common misconceptions about AI lip syncing

AI lip syncing isn't perfect… yet. While today's AI lip-sync tools deliver impressive results, you may still need to review the outputs and make minor adjustments. The technology excels at creating believable, expressive lip movements, but edge cases (like overlapping dialogue or extreme camera angles) can occasionally trip it up.

It's not just for avatars. AI lip syncing works for real people, dubbed footage, and localization projects. Whether you're creating an AI avatar lip-sync video or syncing audio to your CEO's existing footage, the technology adapts to your content type.

Why AI lip syncing matters now

As demand for multilingual, short-form, and scalable video grows, creators and companies need faster ways to produce synchronized content. Video production timelines are shrinking. Audiences expect polished, professional videos across every platform, from TikTok to LinkedIn to Instagram Stories.

AI removes the manual bottleneck and makes video lip sync scalable, primarily through APIs and auto-generated video platforms. Instead of hiring animators or spending days in post-production, you can generate talking head videos in minutes.

Common mistakes that wreck AI lip sync outputs (and how to avoid them)

The biggest mistake people make with AI lip syncing is assuming it's completely hands-off. While tools like VEED Fabric 1.0 and Lip Sync API automate the complex parts, input quality and creative alignment still play a massive role in achieving believable, expressive results.

Here are the five most common mistakes and exactly how to fix them.

1. Uploading low-quality or mismatched audio

If your audio is muffled, distorted, or poorly paced, AI struggles to generate accurate lip movement. This happens because the model can't identify clear phonemes, which are essential for matching mouth shapes to sounds.

Background noise, echo, or inconsistent volume levels confuse the AI. It might sync to the wrong sounds or produce unnatural mouth movements.

How to fix it:

Record in a quiet space with a quality microphone. Even a decent USB mic makes a considerable difference compared to a laptop's built-in microphone. Use VEED's AI audio tools to clean up audio before generating your lip-sync video. Remove background hum, keyboard clicks, or ambient noise.

Example: A customer used a Zoom recording with echo, leading to unnatural lip movement in Fabric videos. Switching to a studio-recorded script fixed it instantly. The same script, better audio, but with a completely different result.
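A quick pre-flight check can catch obvious audio problems before you upload. The sketch below uses only Python's standard library and handles 16-bit PCM WAV files; the `audio_preflight` name and every threshold in it are assumptions chosen for illustration, not requirements published by VEED.

```python
import array
import sys
import wave


def audio_preflight(source, min_rate=16000, clip_threshold=0.99):
    """Rough sanity check for a 16-bit PCM WAV file (path or file-like object)."""
    with wave.open(source, "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        rate = wav.getframerate()
        samples = array.array("h", wav.readframes(wav.getnframes()))
    if sys.byteorder == "big":
        samples.byteswap()  # WAV data is little-endian
    if not samples:
        return {"sample_rate": rate, "peak": 0.0, "clipped_ratio": 0.0, "ok": False}
    # Peak level and share of near-full-scale samples, both on a 0..1 scale.
    peak = max(abs(s) for s in samples) / 32768
    clipped = sum(1 for s in samples if abs(s) / 32768 >= clip_threshold)
    clipped_ratio = clipped / len(samples)
    return {
        "sample_rate": rate,
        "peak": peak,
        "clipped_ratio": clipped_ratio,
        # Assumed thresholds: adequate sample rate, almost no clipping, not too quiet.
        "ok": rate >= min_rate and clipped_ratio < 0.001 and peak > 0.1,
    }
```

A check like this will not detect echo or background noise, so it complements listening to the recording rather than replacing it.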

2. Using unclear or unstyled image/character prompts

VEED Fabric's avatar generation relies on detailed visual prompts. Generic inputs such as "a man" or "a woman" yield bland or inconsistent results. The AI has nothing specific to latch onto, so it defaults to average features.

How to fix it:

Be specific with your character descriptions. Instead of "a man," try "middle-aged man with a beard, glasses, wearing a blue shirt, comic book style." Include style keywords: realistic, claymation, anime, watercolor, 3D render, etc.

Example: Marketers creating spokesperson avatars saw higher engagement after switching from vague to stylized prompt descriptions. "Professional woman" became "confident woman in her 30s, wearing a blazer, realistic style with warm lighting." The second version performed 40% better in A/B tests.

3. Misaligning emotion between voice and visual

A cheerful voice paired with a neutral or serious avatar creates cognitive dissonance for viewers. Your audience immediately notices this mismatch, and it undermines credibility.

The emotional tone needs to match across audio and visual. If your voiceover is enthusiastic, your avatar should smile and show energy.

How to fix it:

Use emotion tags in Fabric, or match the visual tone to the voice energy. Choose upbeat or serious styles accordingly. Preview your video before finalizing. Does the avatar's expression match the energy of the voice? If not, regenerate with adjusted emotion tags.

Example: A client generated an excited customer testimonial but used a static, corporate-looking avatar. The mismatch felt awkward. Regenerating with a smiling, animated style doubled watch time and increased shares by 3x.

4. Skipping the preview and polish stage

Even with strong inputs, minor sync issues or visual glitches can occur. Assuming your video is "done" right after generation is a common oversight.

AI is powerful, but it's not perfect. You might notice slight timing issues, awkward transitions, or moments where the lip sync feels slightly off.

How to fix it:

Always preview your output before publishing. Watch the full video with sound on. Use VEED's editor to trim awkward sections, adjust pacing, or add subtitles. Subtitles keep the video watchable for the many viewers who scroll with sound off, and they help mask minor sync imperfections.

Example: A brand used raw lip-synced clips in ads and noticed high drop-off at the 3-second mark. Adding subtitles and tightening edit cuts (removing a slow intro) increased click-through rate by 35%.

5. Ignoring platform fit and format

Not all formats work across platforms. A landscape avatar clip may underperform on TikTok or Instagram Reels, where vertical, punchy content is expected.

Each platform has its own video culture. LinkedIn prefers professional, landscape videos. TikTok wants vertical, fast-paced content. Instagram Stories need 9:16 with text overlays.

How to fix it:

Tailor your lip-synced video to the platform. Use square or portrait formats, cut to optimal length, and stylize appropriately. Create platform-specific versions of the same content.

Example: Creators saw a 3x boost in Instagram Reels performance after reformatting avatar videos from 16:9 landscape to 9:16 vertical. The content was identical. Only the format changed.

AI lip syncing vs manual lip syncing

AI lip-syncing automates the process using machine learning, dramatically reducing time and human labor. Upload your audio, generate your video, and get results in minutes (typically 5-10 with Fabric 1.0). Manual lip syncing, by contrast, involves frame-by-frame adjustments by an animator or editor. It's more precise for complex animation work, but extremely time-consuming and expensive for most content creators.

Key benefits of AI lip syncing

Here's why businesses and creators are switching to AI lip sync video tools:

- Saves hours of manual editing or animation. What used to take 8+ hours per video now takes 5-10 minutes. That time savings compounds across dozens or hundreds of videos.

- Enables scalable content creation for social media, education, or marketing. Run A/B tests with different spokespersons, create personalized video messages, or spin up localized versions without reshooting.

- Supports translation and localization workflows through dubbing. Replace the audio track in any language while keeping lip movements perfectly synced. No need to reshoot with multilingual talent.

- Enhances accessibility with automated avatar or dubbed narration. Make your content more inclusive by offering multiple language options or by creating sign-language interpreter avatars.

AI lip syncing best practices

Advanced practitioners focus on aligning technical precision with emotional realism. The best AI lip sync workflows incorporate pacing, tone, context, and platform strategy to maximize viewer engagement and authenticity.

Here's how to make lip sync videos that actually perform.

1. Apply emotion tagging strategically

Use Fabric's emotional style tags (like "serious," "excited," "contemplative") based on your script's tone. Matching energy between voice and avatar makes outputs feel more human.

Pro tip: Run A/B tests with different emotion tags on the same audio and compare viewer retention. You'll quickly learn which emotional tones resonate with your audience.

2. Break long scripts into shorter dialogue blocks

Instead of uploading long monologues, segment your dialogue into digestible clips. This improves timing accuracy and makes edits easier. Short clips are also more shareable on social media. Try to keep each segment under 45 seconds for social video formats. Anything longer than that risks a drop-off.
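One way to segment a script before recording is to group sentences until the estimated spoken length approaches the 45-second limit. This is a minimal sketch: `split_script` is a hypothetical helper, and the 150 words-per-minute speaking rate is an assumption you should tune to your own voiceover.

```python
import re


def split_script(script, max_seconds=45, words_per_minute=150):
    """Group sentences into segments whose estimated spoken length stays under max_seconds.

    A single sentence longer than the limit is kept whole as its own segment.
    """
    max_words = int(max_seconds * words_per_minute / 60)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", script.strip()) if s]
    segments, current, count = [], [], 0
    for sentence in sentences:
        n = len(sentence.split())
        if current and count + n > max_words:
            segments.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        segments.append(" ".join(current))
    return segments


demo = "One two three. Four five six. Seven eight nine."
print(split_script(demo, max_seconds=3))
```

Splitting on sentence boundaries keeps each clip self-contained, which also makes it easier to regenerate a single segment if its sync looks off.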

3. Optimize inputs for style and sync

Design your prompts or image uploads with platform aesthetics in mind. Use anime-style avatars for younger audiences on TikTok. Use realistic avatars for LinkedIn or training videos. Match the visual style to where your content will live.

Use Fabric with VEED's voice changer and subtitle generator for complete control over your output.

4. Automate at scale using the API

Integrate Fabric or the Lip Sync API into your CMS or video production workflow to automatically generate hundreds of localized or dynamic videos at scale. This is ideal for e-learning companies or SaaS teams translating training content into multiple languages, so you don’t need to reshoot each version.

Simply dub the audio and the system syncs the lips to it, so a single video shoot can yield ten or more lip-synced language versions.
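The batch pattern might look like the sketch below. It deliberately does not call any real endpoint: `submit_job` is a hypothetical callable you would write around your lip-sync service's client, and the job fields (`video`, `audio`, `language`) are illustrative names rather than documented API parameters.

```python
from concurrent.futures import ThreadPoolExecutor


def build_jobs(video_path, audio_by_language):
    """One job per language: same source video, different dubbed audio track."""
    return [
        {"video": video_path, "audio": audio, "language": lang}
        for lang, audio in sorted(audio_by_language.items())
    ]


def run_batch(jobs, submit_job, max_workers=4):
    """Run jobs concurrently through a caller-supplied submit_job(job) function.

    submit_job is a placeholder for your own API wrapper; it should return
    the output location. Failures are collected per language, not raised.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {job["language"]: pool.submit(submit_job, job) for job in jobs}
    results, errors = {}, {}
    for lang, future in futures.items():
        try:
            results[lang] = future.result()
        except Exception as exc:
            errors[lang] = str(exc)
    return results, errors
```

Collecting errors per language means one failed upload does not block the other versions, and the failed jobs can be retried on their own.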

5. Track engagement metrics post-publication

Measure performance across click-through rate, watch time, and share rate for videos using lip-synced avatars versus static or traditional formats.

KPI targets to aim for:

- 60% video completion rate for social platforms

- 15% increase in multilingual engagement for localized campaigns

- 80% reduction in production time with API automation
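The targets above can be checked against your own numbers with a few lines of arithmetic. This is a sketch: the field names in `stats` are hypothetical, so map them to whatever your analytics export provides.

```python
# Targets taken from the KPI list above.
TARGETS = {
    "completion_rate": 0.60,
    "engagement_lift_pct": 15.0,
    "time_reduction_pct": 80.0,
}


def percent_change(baseline, current):
    """Percentage change from baseline to current (0.0 if baseline is zero)."""
    return (current - baseline) / baseline * 100 if baseline else 0.0


def kpi_report(stats):
    """Return {kpi_name: (measured_value, target_met)} for the three KPIs."""
    views = stats["views"]
    measured = {
        "completion_rate": stats["completed_views"] / views if views else 0.0,
        "engagement_lift_pct": percent_change(
            stats["baseline_engagement"], stats["localized_engagement"]
        ),
        # A drop in production time is reported as a positive reduction.
        "time_reduction_pct": -percent_change(
            stats["manual_minutes"], stats["automated_minutes"]
        ),
    }
    return {name: (value, value >= TARGETS[name]) for name, value in measured.items()}


report = kpi_report({
    "views": 1000, "completed_views": 660,
    "baseline_engagement": 200, "localized_engagement": 236,
    "manual_minutes": 480, "automated_minutes": 10,  # 8 hours vs 10 minutes
})
print(report)
```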

Test, measure, iterate. The data will tell you what's working.

Final frame: Make your videos speak

Creating great lip sync is about believability. With VEED’s Fabric and Lip Sync API, anyone can now create realistic, expressive, and synchronized videos at scale. Whether you’re building personalized content, translating for global audiences, or turning avatars into brand reps, these tools give you the flexibility and quality you need.

Ready to make your content speak? Start with Fabric or Lip Sync API and let your voice move your visuals.