Summary / Key Takeaways:

- Kling O1 (Omni One) is a unified AI video model that lets you edit existing videos with text prompts: swap objects, add elements, or delete unwanted items without reshooting

- Multi-Elements mode revolutionizes video editing by combining video inputs with text prompts to perform complex edits like object replacement and style changes

- The model uses a Chain of Thought (CoT) approach for text-to-video generation, resulting in better motion accuracy and prompt interpretation

- Kling O1's Multi-Modal Video Engine processes text, images, and video, enabling precise frame-based video generation with consistent visual quality

- Kling O1 is now available in VEED's AI Playground, giving you access to this powerful model directly in your video editing workflow

You've probably tried AI video generators that just don't get it. You type out a detailed prompt describing exactly what you want—the camera angle, the motion, the mood—and the AI spits out something that barely resembles what you asked for. Sound familiar?

But here's the bigger problem: what happens when you've already shot footage and need to fix or enhance it? Until now, you'd need to reshoot the scene, hire a VFX artist, or accept the imperfection.

That's what Kling AI is solving with its new O1 model. Released on December 1, 2025, Kling O1 isn't just another text-to-video generator. It's a complete video creation and editing system that can modify existing footage using simple text prompts. Want to swap a cat for a wolf in your video? Change someone's outfit? Remove distracting objects? Add flames or other effects? Kling O1 handles it all.

This guide will walk you through everything you need to know about Kling O1—from how it works and what makes it different, to how to actually use it for your video projects. Whether you're creating content for social media, marketing, or just experimenting with AI video generation, you'll learn how to get the best results from this new model.

Want to be a Kling O1 expert? We're creating a detailed prompt engineering and workflow guide specifically for this model. Stay tuned for advanced techniques, prompt templates, and real-world examples that'll help you get professional results every time.

What is Kling AI O1?

One reasoning model with multi-modal capabilities

Release Date: December 1, 2025

Key Features:

- Multi-Elements mode for video-to-video editing—swap, add, delete, or restyle elements in existing footage

- Chain of Thought (CoT) reasoning system that analyzes prompts before generating video

- Multi-Modal Video Engine that processes text prompts, video, and image inputs for enhanced control

- Support for 5-second and 10-second video generation in 16:9, 9:16, and 1:1 aspect ratios

- Professional and Standard quality modes for different use cases

- Enhanced motion accuracy and camera control compared to previous Kling models

Most AI video tools make you choose: do you want to generate something new, or edit what you have? Need to add an object? That's a different tool. Want to change the style? Switch modes again. Kling O1 takes a different approach.

Instead of jumping between separate tools for creation, editing, and refinement, Kling O1 gives you three integrated workflows that work together. You can generate a video from scratch, then immediately edit it. Or start with existing footage and transform it completely. The Multi-Modal Video Engine understands text descriptions, visual references, and video content simultaneously—letting you work like a director who can iterate on a vision without switching between different specialized tools.

Here's what Kling O1 actually does:

Multi-Elements (Video-to-Video Editing): Upload existing footage and modify it with text prompts. Swap objects (replace a cat with a dog), add new elements (insert flames or effects), delete unwanted items (remove background distractions), or restyle the entire aesthetic (convert realistic footage to anime style). This is video editing powered by AI reasoning—no masking, no tracking, just describe what you want changed.

Text-to-Video Generation: Create videos from detailed text descriptions. O1 uses a reasoning process to understand your prompt before generating, which means better motion accuracy, consistent subjects across frames, and camera movements that actually follow your instructions. You get what you asked for on the first try more often.

Image-to-Video (Frame Mode): Upload start and end frame images to define exactly where your video begins and ends. The Multi-Modal Video Engine generates smooth motion and transitions between your frames, maintaining visual consistency throughout. Perfect when you need precise control over composition and branding.

The breakthrough here isn't just that O1 can do all three—it's that they work together in one continuous workflow. Generate a base video with text-to-video, then jump into Multi-Elements to refine specific details. Or start with frame mode for precise composition, then use Multi-Elements to adjust elements you want to change. You're not switching tools; you're iterating on a vision.

This unified approach comes from Kling O1's Multi-Modal Video Engine—an architecture built to process text, images, and video simultaneously. It doesn't just understand what things look like; it understands how they move, how they should interact, and how changes should behave across time. That's what makes sophisticated operations like object swapping or style transformations possible while maintaining consistency across all frames.

Availability: Kling O1 is now available in VEED's AI Playground, allowing you to generate and edit AI videos directly within your video editing workflow. Try Kling O1 on VEED!

Pricing: Kling reports 35 credits for a 5-second professional-mode video and 70 credits for a 10-second professional-mode video.
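At these reported rates, budgeting a batch of clips is simple arithmetic. The sketch below is a hypothetical Python helper (the name `estimate_pro_credits` is ours, not part of any Kling or VEED API) that totals professional-mode credits; standard-mode rates aren't listed here, so it deliberately rejects anything it can't price.

```python
# Professional-mode credit prices as reported by Kling. Standard-mode prices
# are not given in this guide, so this hypothetical helper only covers pro mode.
PRO_CREDITS_BY_DURATION = {5: 35, 10: 70}


def estimate_pro_credits(durations_s):
    """Total the credits for a batch of professional-mode generations.

    durations_s: iterable of clip lengths in seconds (5 or 10).
    """
    total = 0
    for seconds in durations_s:
        if seconds not in PRO_CREDITS_BY_DURATION:
            raise ValueError(f"unsupported duration: {seconds}s (use 5 or 10)")
        total += PRO_CREDITS_BY_DURATION[seconds]
    return total


# Three 5-second clips plus one 10-second clip: 3 * 35 + 70 = 175 credits.
print(estimate_pro_credits([5, 5, 5, 10]))
```

Both durations work out to 7 credits per second, so two 5-second clips cost the same as one 10-second clip. The choice comes down to whether the scene needs one continuous take.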

Multi-Elements: The game-changer for video editing

Edit existing footage with text prompts—no VFX skills required

This is where Kling O1 gets revolutionary. Multi-Elements mode lets you upload existing video and modify specific elements using nothing but text prompts. Think of it as Photoshop's content-aware tools, but for video, and powered by AI reasoning.

Here's what makes Multi-Elements different from traditional video editing or even other AI video tools:

You don't need to reshoot footage. Recorded a video but want to change a prop? Traditional workflow: reshoot everything. Kling O1 workflow: upload the video, describe what you want to change, done.

You don't need VFX expertise or masking. Normally, swapping objects in video requires frame-by-frame masking, tracking, and compositing. Kling O1 handles all of this automatically through its Multi-Modal Video Engine—you just describe the change you want.

You can transform video aesthetics instantly. Want to change your video's style from realistic to animated? From modern to cyberpunk? From day to night? Multi-Elements can restyle your entire video while maintaining the original motion and composition.

What you can do with Multi-Elements

Swap: Replace any element in your video with something else. Turn a cat into a wolf. Change someone's outfit. Replace a boring background object with something more interesting. The model understands what you want to replace and handles the substitution naturally across all frames.

Add: Insert new elements into your existing video. Add flames to a scene. Place objects in someone's hands. Introduce animated elements that interact with your footage. O1 reasons through how these additions should behave in the context of your video's motion and lighting.

Delete: Remove unwanted objects from your footage. Clean up distracting background elements. Eliminate props that didn't work. The model fills in the space naturally, maintaining continuity across frames.

Restyle: Transform your video's entire aesthetic. Convert realistic footage to cartoon style. Apply wool felt, claymation, or anime aesthetics. Change the mood from bright and cheerful to dark and moody. This is like applying an Instagram filter, but with AI understanding of how styles should behave across motion and time.

How Multi-Elements works

The process is straightforward:

- Upload your existing video (MP4/MOV files, up to 100MB, maximum 10 seconds, 720P/1080P resolution)

- Optionally upload a reference image showing what you want to add or swap

- Write a text prompt describing the edit (e.g., "swap [cat] from [@Video] for [dog] from [@image]")

- Let O1's Multi-Modal Video Engine process your request

- Download your edited video or continue editing in VEED's video editor
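Because a rejected upload wastes a round trip, it can help to check a clip against the limits listed above before uploading it. This is a minimal sketch, assuming you already have the clip's size, duration, and dimensions (for example from ffprobe); `VideoInfo` and `check_source_video` are hypothetical names, and reading "720P/1080P" as the clip's shorter side is our assumption.

```python
from dataclasses import dataclass
from pathlib import Path

# Limits from the Multi-Elements upload step.
ALLOWED_VIDEO_EXTENSIONS = {".mp4", ".mov"}
MAX_VIDEO_BYTES = 100_000_000      # assumption: "100MB" read as decimal megabytes
MAX_VIDEO_SECONDS = 10.0
ALLOWED_SHORT_SIDES = {720, 1080}  # assumption: 720P/1080P means the shorter side


@dataclass
class VideoInfo:
    """Hypothetical container for metadata gathered with your own tooling."""
    path: str
    size_bytes: int
    duration_s: float
    width_px: int
    height_px: int


def check_source_video(info: VideoInfo) -> list:
    """Return human-readable problems; an empty list means the clip fits the limits."""
    problems = []
    if Path(info.path).suffix.lower() not in ALLOWED_VIDEO_EXTENSIONS:
        problems.append("file must be MP4 or MOV")
    if info.size_bytes > MAX_VIDEO_BYTES:
        problems.append("file is larger than 100MB")
    if info.duration_s > MAX_VIDEO_SECONDS:
        problems.append("clip is longer than 10 seconds")
    if min(info.width_px, info.height_px) not in ALLOWED_SHORT_SIDES:
        problems.append("resolution should be 720p or 1080p")
    return problems


clip = VideoInfo("kitchen_take3.mov", 48_000_000, 8.5, 1920, 1080)
print(check_source_video(clip))  # []
```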

The Multi-Modal Video Engine analyzes your original video, understands the motion dynamics, lighting conditions, and spatial relationships, then applies your requested changes while maintaining consistency across all frames. This is significantly more sophisticated than simple frame-by-frame processing—O1 reasons through how changes should behave over time.

Real-world use cases for Multi-Elements

- For content creators: Fix wardrobe issues or props without reshooting. Add visual effects to make content more engaging. Experiment with different aesthetics to see what resonates with your audience.

- For marketers: Update product colors or designs in existing footage. Remove competitor logos or distracting elements. Add seasonal elements (snow, leaves, decorations) to evergreen content.

- For filmmakers: Remove unwanted objects that snuck into shots. Add atmospheric effects like fog or lighting. Experiment with different visual styles in post-production.

- For social media managers: Quickly adapt existing content for different platforms or trends. Add trending elements to older footage. Create variations of successful content without full re-production.

How Kling O1's Multi-Modal Video Engine works

Understanding multi-modal video generation

When you submit a request to Kling O1—whether that's generating new video or editing existing footage—the Multi-Modal Video Engine processes multiple types of information simultaneously.

For Multi-Elements editing, this means:

- Analyzing your source video to understand motion, objects, lighting, and composition across frames

- Processing your text prompt to understand what changes you want

- Examining reference images (if provided) to understand visual targets

- Reasoning through how these changes should be applied across time while maintaining consistency

For text-to-video generation, the reasoning process works like this:

Let's say you prompt: "A woman in a red dress walks through a park at sunset, camera follows her from behind." A typical AI video model might generate a woman, some park-like scenery, and motion that vaguely resembles walking. But O1's multi-modal reasoning breaks this down: subject (woman), clothing (red dress), action (walking), environment (park), lighting (sunset), camera movement (following from behind). It reasons through how these elements interact—the dress should move naturally as she walks, the sunset lighting should affect the colors and shadows, the camera should maintain consistent distance and angle.

This reasoning step adds processing time but dramatically improves output quality. You'll notice it most in motion consistency. Characters don't morph between frames. Objects maintain their properties. Camera movements flow smoothly rather than jumping around.

How multi-modal input improves video quality

The Multi-Modal Video Engine gives you precision that single-input models can't match. When you upload reference images in frame mode or Multi-Elements mode, O1 analyzes the visual characteristics—not just what objects are present, but how they're styled, lit, composed, and positioned. This visual information guides the entire generation or editing process.

This is especially powerful for brand work or projects requiring visual consistency. Upload an image of your product with exact lighting and positioning, add a text prompt describing the motion or changes you want, and O1's multi-modal processing ensures the output maintains your visual standards.

Motion dynamics and camera control

Kling O1 shines when handling complex motion. The model understands physics better than previous versions, which means objects move in ways that look natural. A ball bounces with realistic weight. Water flows according to gravity. People's clothing and hair respond to movement.

For Multi-Elements editing, this physics understanding is crucial. When you swap one object for another, O1 doesn't just replace pixels—it understands how the new object should move, cast shadows, and interact with its environment based on the motion in your original footage.

Camera control is where many AI video models struggle, but O1 gives you precise options in text-to-video mode. You can specify camera movements like pans, tilts, zooms, and tracking shots. The model reasons through how these movements should work in relation to your subject and environment. When you ask for a slow zoom out, you get exactly that—not a jittery approximation.

The frame-mode option takes this even further by letting you define exact start and end frames. This gives you cinematic control over your shots in ways that feel more like working with a professional camera operator than an AI tool.

Kling O1 vs Kling 1.6: What's actually different?

Performance improvements you'll notice

The jump from Kling 1.6 to O1 isn't just a version bump—it's a fundamental change in approach. Kling 1.6 was already competitive, but O1 addresses the main complaints users had with earlier versions.

Multi-modal capabilities represent the biggest architectural change. While Kling 1.6 worked primarily with text prompts, O1's Multi-Modal Video Engine can process both text and images together. This multi-modal approach is what enables the enhanced frame mode and dramatically better prompt accuracy.

Prompt accuracy has seen significant improvement. With Kling 1.6, you might need to regenerate your video multiple times to get something close to your vision. O1's reasoning system means your first generation is more likely to match your prompt. This saves both time and credits.

Subject consistency across frames is noticeably better. Characters maintain their facial features and clothing details throughout the video. Objects don't mysteriously change color or shape. This consistency is crucial for any video where you need to maintain a specific brand look or character appearance.

Motion quality shows clear refinement. Actions that looked slightly off in 1.6—like walking gaits or hand movements—appear more natural in O1. The model understands how different types of motion should work, from fast action to slow, deliberate movements.

Camera movements are smoother and more predictable. If you've struggled with jerky pans or inconsistent tracking shots in previous versions, O1 addresses these issues through better reasoning about spatial relationships and movement dynamics.

When to use O1 vs other Kling models

You'll want to reach for Kling O1 when your project demands accuracy and consistency. Here's when it makes sense:

Complex motion scenarios: Multiple subjects moving independently, interactions between objects, or intricate camera work all benefit from O1's reasoning capabilities.

Multi-modal projects: Anytime you need to combine text descriptions with specific visual references, O1's Multi-Modal Video Engine is your best option.

Professional projects: When you need output that's client-ready or publication-quality, the enhanced consistency and accuracy justify the slightly higher credit cost.

Precise prompt requirements: If you have a specific vision and need the AI to nail it (rather than giving you creative variations), O1's prompt understanding will save you regeneration credits in the long run.

Stick with Kling 1.6 or other models for quick experiments, simple scenes, or when you're exploring creative directions rather than executing a specific vision. O1's higher credit cost is easiest to justify when you know exactly what you need.

How to use Kling O1 for video generation

1. Getting started with text-to-video

For simple video creation

🥇Best Choice: Text-to-video mode with professional quality

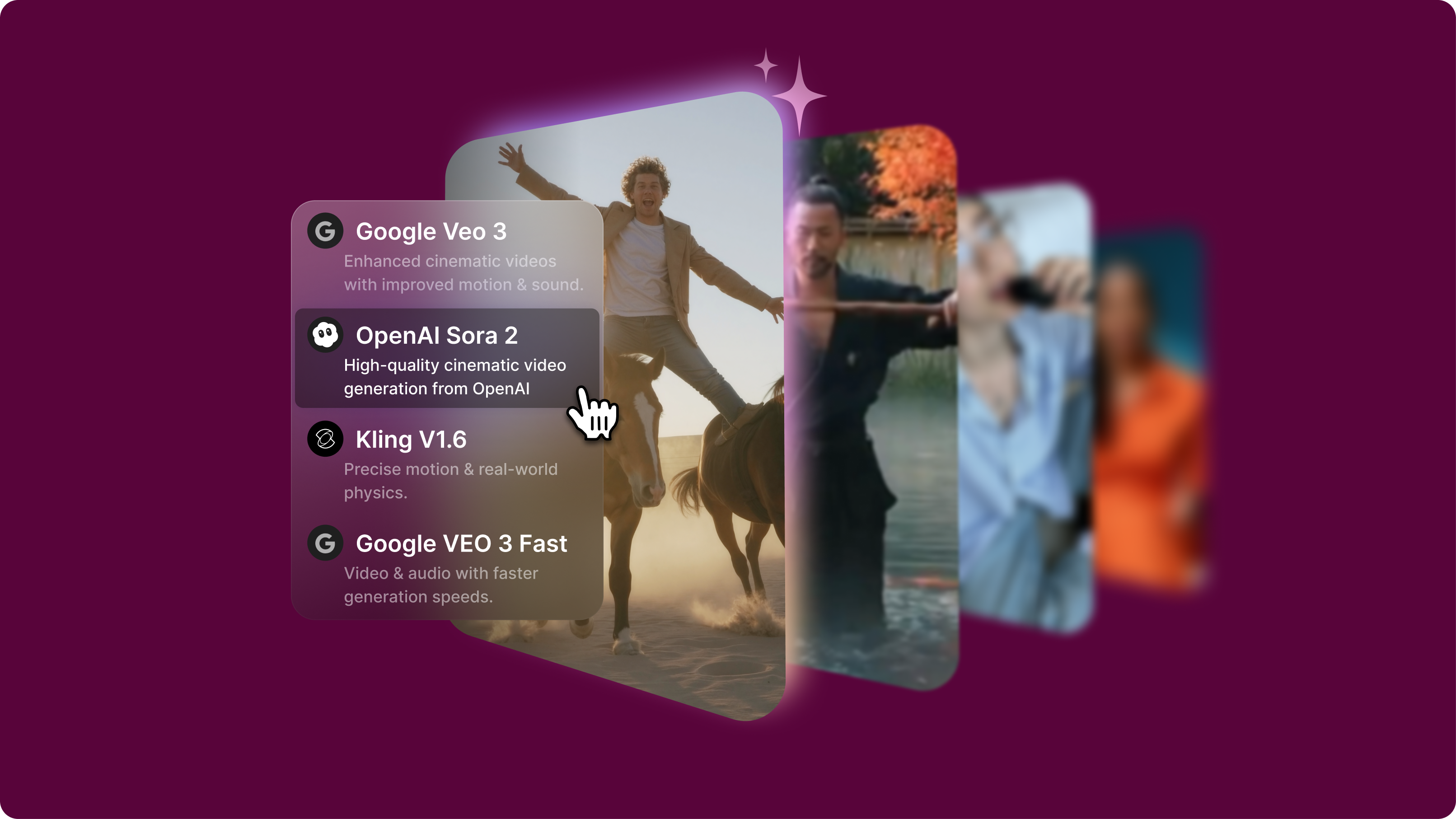

Text-to-video is your starting point for most Kling O1 projects. Navigate to VEED's AI Playground and select "Video O1" from the model options. You'll see a simple prompt box—this is where the magic happens.

Start by writing a clear, detailed prompt that describes your desired video. The more specific you are about subjects, actions, environment, and camera movement, the better O1 can reason through your request. Instead of "a cat playing," try "an orange tabby cat batting at a dangling toy mouse, shot from above with soft natural lighting."

Choose your duration (5 or 10 seconds) based on your needs. Five seconds works well for social media content, product demos, or scenes with simple actions. Ten seconds gives you room for more complex sequences or smoother transitions. Keep in mind that 10-second generations cost double the credits.

Select professional mode if quality matters for your project. The difference shows up in detail retention, motion smoothness, and overall polish. Standard mode works fine for tests or content where slight quality trade-offs are acceptable.

🥈Runner-up: Standard mode for testing prompts

When you're still figuring out the right prompt or exploring creative directions, standard mode lets you iterate without burning through credits. Once you've dialed in your prompt, switch to professional mode for your final generation.

2. Working with frame mode for multi-modal precision

Setting up your start and end frames

Image requirements: Upload images as PNG or JPG files, maximum 10MB each
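A file extension can be wrong, so one way to pre-check a start or end frame is to look at the file's leading bytes: PNG files begin with a fixed 8-byte signature and JPEG files with `FF D8 FF`. This sketch assumes "10MB" means 10,000,000 bytes and uses hypothetical helper names; it only checks format and size, not resolution or content.

```python
from pathlib import Path
from typing import Optional

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"   # standard 8-byte PNG file signature
JPEG_SIGNATURE = b"\xff\xd8\xff"       # JPEG start-of-image marker plus next marker byte
MAX_IMAGE_BYTES = 10_000_000           # assumption: "10MB" read as decimal megabytes


def check_frame_image(data: bytes) -> Optional[str]:
    """Return a problem description, or None if the bytes look like an acceptable frame."""
    if len(data) > MAX_IMAGE_BYTES:
        return "image is larger than 10MB"
    if data.startswith(PNG_SIGNATURE) or data.startswith(JPEG_SIGNATURE):
        return None
    return "image must be PNG or JPG"


def check_frame_file(path: str) -> Optional[str]:
    """Convenience wrapper that reads a start or end frame from disk."""
    return check_frame_image(Path(path).read_bytes())
```

Calling `check_frame_file("start_frame.png")` on a real PNG under the size limit returns `None`; any string result tells you what to fix before uploading.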

Frame mode showcases Kling O1's multi-modal capabilities by letting you define exactly where your video starts and ends. This is powerful for storytelling, product reveals, or any scenario where you need precise visual outcomes.

Navigate to frame mode and you'll see options to upload both a start frame and end frame. These images become anchors—O1's Multi-Modal Video Engine will generate the motion and transitions between them while maintaining the visual elements you've defined.

For your start frame, upload an image that represents exactly how you want your video to begin. This could be a product shot, a character pose, or an environment establishing shot. Make sure the image is high quality because O1 will work to maintain those details throughout the generation.

Your end frame should show where you want the video to finish. The Multi-Modal Video Engine will reason through how to transition from start to end, considering motion paths, camera movement, and any transformations that need to happen between these two points.

Prompt strategy: Describe the motion and transition between frames, not the frames themselves

Your prompt in frame mode should focus on how subjects move and how the camera behaves during the transition. "Camera slowly zooms in while maintaining focus on the product" or "character walks from background to foreground, turning to face camera." O1's multi-modal processing uses your frames for visual reference and your prompt for motion direction.

Quality settings: Professional mode recommended for frame-based projects

Frame mode benefits significantly from professional quality settings. The Multi-Modal Video Engine needs to maintain consistency with your uploaded images while generating smooth motion—professional mode gives it the resolution and processing to do this well.

3. Optimizing your prompts for better results

Structure that works with O1's reasoning

- Be specific about subjects: Instead of "a person," describe "a woman in her 30s with dark hair wearing a blue jacket"

- Define the action clearly: "walks briskly toward camera" beats "moves forward"

- Specify camera behavior: Include phrases like "static shot," "slow pan right," or "tracking shot following subject"

- Describe lighting conditions: "golden hour sunlight," "dramatic side lighting," or "soft overcast light"

- Set the mood: Add emotional context like "energetic," "contemplative," or "mysterious"

O1's reasoning system works best when you give it concrete details to work with. Think of your prompt as instructions for a director of photography—what would you tell them to capture your vision?
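If you write many prompts, it can help to keep those ingredients as separate fields and join them in a fixed order, subject and action first. This is a minimal sketch with a hypothetical `PromptSpec` class; the field order mirrors the checklist above and is a convention, not a documented Kling requirement.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt ingredients listed above."""
    subject: str
    action: str
    environment: str = ""
    lighting: str = ""
    camera: str = ""
    mood: str = ""

    def to_prompt(self) -> str:
        # Subject and action lead the prompt; the rest follow as comma-separated clauses.
        head = f"{self.subject} {self.action}".strip()
        clauses = [head, self.environment, self.lighting, self.camera, self.mood]
        # Skip any ingredient left empty so the prompt has no dangling commas.
        return ", ".join(c.strip() for c in clauses if c.strip())


spec = PromptSpec(
    subject="A woman in her 30s with dark hair wearing a blue jacket",
    action="walks briskly toward camera",
    lighting="soft overcast light",
    camera="tracking shot following subject",
    mood="contemplative",
)
print(spec.to_prompt())
```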

Common prompt patterns that deliver results

Here are proven prompt structures that work well with Kling O1:

For character actions: "[Character description] [action] in [environment], [lighting condition], [camera movement]"

Example: "A young chef in white uniform flips vegetables in a wok in a modern kitchen, warm afternoon light through windows, slow zoom out from close-up"

For product reveals: "[Product description] [revealing action], [background/setting], [lighting], [camera behavior]"

Example: "A sleek silver smartphone rotates slowly on a marble surface, studio lighting with soft shadows, camera circles around product"

For landscape/environment: "[Camera movement] revealing [environment description], [time of day], [mood]"

Example: "Aerial camera rises above misty mountain valleys, early morning golden light, serene and majestic"
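The bracketed patterns above are effectively fill-in-the-blank templates. A sketch using Python's `str.format` syntax, with hypothetical slot names of our choosing, can refuse to build a prompt when a slot is missing. The product and landscape examples above paraphrase their patterns slightly, so only the character example reproduces exactly.

```python
import string

# The three patterns above, rewritten with named slots (slot names are our own).
TEMPLATES = {
    "character": "{character} {action} in {environment}, {lighting}, {camera}",
    "product": "{product} {reveal}, {setting}, {lighting}, {camera}",
    "landscape": "{camera} revealing {environment}, {time_of_day}, {mood}",
}


def fill_template(name: str, **slots: str) -> str:
    """Fill a named template, raising KeyError if any slot is missing."""
    template = TEMPLATES[name]
    # Formatter().parse yields (literal, field_name, spec, conversion);
    # field_name is None for trailing literal text.
    required = {field for _, field, _, _ in string.Formatter().parse(template) if field}
    missing = required - set(slots)
    if missing:
        raise KeyError(f"missing slots for {name!r}: {sorted(missing)}")
    return template.format(**slots)


print(fill_template(
    "character",
    character="A young chef in white uniform",
    action="flips vegetables in a wok",
    environment="a modern kitchen",
    lighting="warm afternoon light through windows",
    camera="slow zoom out from close-up",
))
```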

Pro tip: We're putting together a comprehensive prompt engineering guide for Kling O1 that includes 50+ tested prompt templates, multi-modal workflow examples, and troubleshooting strategies. Check back soon for the complete guide.

Choosing the right mode for your project

When to use Multi-Elements vs text-to-video vs frame mode

Use Multi-Elements when:

- You have existing footage that needs modification

- You want to fix or enhance recorded content without reshooting

- You need to swap, add, or delete specific elements

- You want to experiment with different visual styles on the same footage

- You're working with client footage that needs adjustments

Use text-to-video when:

- You're creating content from scratch

- You have a clear vision but no existing footage

- You want to generate multiple creative variations quickly

- You're exploring concepts before committing to production

Use frame mode when:

- You need precise control over start and end points

- You're working with brand guidelines that require specific visuals

- You want to create product animations with exact compositions

- You have design mockups that need to be brought to life with motion
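The three checklists boil down to two questions: do you already have footage, and do you have images for the exact start and end of the shot? This hypothetical `choose_mode` helper encodes that reading; giving existing footage priority is our assumption, since the lists above don't say what to do when both apply.

```python
from enum import Enum


class Mode(Enum):
    MULTI_ELEMENTS = "Multi-Elements"
    FRAME = "Frame mode"
    TEXT_TO_VIDEO = "Text-to-video"


def choose_mode(has_footage: bool, has_start_end_frames: bool) -> Mode:
    """Pick a Kling O1 mode from what you already have (hypothetical rule of thumb)."""
    if has_footage:
        # Existing clips that need swaps, additions, deletions, or restyling.
        return Mode.MULTI_ELEMENTS
    if has_start_end_frames:
        # Mockups or brand images that must anchor the first and last frame.
        return Mode.FRAME
    # Nothing to anchor to yet: generate from a text description.
    return Mode.TEXT_TO_VIDEO


print(choose_mode(has_footage=False, has_start_end_frames=True).value)  # Frame mode
```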

Duration and quality decisions

- 5-second videos: Best for social media posts, quick product showcases, simple edits, or when you're batch-generating multiple clips

- 10-second videos: Ideal for narrative sequences, complex edits, character interactions, or when you need smoother transitions

Professional mode delivers higher resolution, better detail retention, smoother motion interpolation, and more consistent lighting. Use it for client work, final deliverables, or any video that represents your brand.

Standard mode offers faster generation times, lower credit costs, and quality that's perfectly acceptable for testing, internal reviews, or platforms where compression will reduce quality anyway.

Aspect ratio considerations

Kling O1 supports 16:9, 9:16, and 1:1 aspect ratios. Choose based on your distribution platform: 16:9 for YouTube and traditional video platforms, 9:16 for vertical social media content like Instagram Stories or TikTok, and 1:1 for square posts that work across multiple platforms.
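If you plan to use frame mode, you can confirm that your start and end images already have the ratio you intend to publish in. This is a convenience check, not a documented Kling requirement; it uses exact fractions so that 1920x1080 compares equal to 16:9, and the platform keys and helper names are hypothetical.

```python
from fractions import Fraction

# Platform-to-ratio choices from the paragraph above (keys are our own labels).
RATIO_BY_PLATFORM = {
    "youtube": "16:9",
    "instagram_stories": "9:16",
    "tiktok": "9:16",
    "square_post": "1:1",
}


def parse_ratio(ratio: str) -> Fraction:
    """Turn a 'W:H' string into an exact fraction, e.g. '16:9' -> Fraction(16, 9)."""
    w, h = (int(part) for part in ratio.split(":"))
    return Fraction(w, h)


def matches_ratio(width_px: int, height_px: int, ratio: str) -> bool:
    """True if an image's dimensions have exactly the given aspect ratio."""
    return Fraction(width_px, height_px) == parse_ratio(ratio)


target = RATIO_BY_PLATFORM["tiktok"]
print(target, matches_ratio(1080, 1920, target))  # 9:16 True
```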

Best practices for getting the most from Kling O1

Prompt engineering strategies

- Front-load the most important information: Place critical details about subjects and actions at the beginning of your prompt, where the model is most likely to prioritize them

- Use consistent terminology: If you call something a "jacket" in one part of your prompt, don't switch to "coat" later—consistency helps O1's reasoning

- Separate concerns with commas: Structure your prompt with clear separators: "Subject and action, environment and setting, lighting conditions, camera behavior"

- Test variations systematically: Change one element at a time when refining prompts so you understand what produces different results

- Build a prompt library: Save prompts that work well for different scenarios—you'll develop patterns that consistently deliver for your style
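The "test variations systematically" and "build a prompt library" tips combine naturally: keep a working prompt as named fields, then generate variants that each change a single field. A minimal sketch with hypothetical helper names, using the coffee-cup prompt suggested at the end of this guide as the base:

```python
# Field order used when rendering a prompt (subject first, per the tips above).
ORDER = ("subject", "detail", "lighting")


def render(fields: dict) -> str:
    """Join the fields into a comma-separated prompt in a fixed order."""
    return ", ".join(fields[key] for key in ORDER if fields.get(key))


def one_at_a_time(base: dict, alternatives: dict) -> list:
    """Return prompt variants that each differ from `base` in exactly one field."""
    variants = []
    for key, options in alternatives.items():
        if key not in base:
            raise KeyError(f"unknown field: {key}")
        for option in options:
            if option == base[key]:
                continue  # identical to the base, so not a real variation
            variant = dict(base)
            variant[key] = option
            variants.append(variant)
    return variants


base = {
    "subject": "a coffee cup being filled",
    "detail": "steam rising",
    "lighting": "morning light from window",
}
for v in one_at_a_time(base, {"lighting": ["golden hour sunlight", "dramatic side lighting"]}):
    print(render(v))
```

Saving `base` and the variants that worked (for example with `json.dumps`) gives you the start of a reusable prompt library.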

Maximizing multi-modal capabilities

- Create high-quality reference images: When using frame mode, the quality of your input images directly affects output quality

- Match lighting between frames: If your start and end frames have different lighting, O1's Multi-Modal Video Engine might struggle with the transition

- Consider composition consistency: Dramatic changes in composition between frames can produce interesting results, but subtle shifts often look more natural

- Use frame mode for brand consistency: Upload images that match your brand guidelines to ensure AI-generated content maintains your visual identity
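To act on the "match lighting between frames" tip before spending credits, you can compare the average brightness of your two frames. This is a rough heuristic, not something Kling documents: it averages Rec. 709 luma over 8-bit RGB pixels (which you could read with an imaging library such as Pillow), and the 0.15 warning threshold is a hypothetical starting point to tune against your own results.

```python
LIGHTING_GAP_WARNING = 0.15  # hypothetical threshold on a 0..1 brightness scale


def mean_luma(pixels) -> float:
    """Average Rec. 709 luma of 8-bit (r, g, b) pixels, scaled to 0..1."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        total += 0.2126 * r + 0.7152 * g + 0.0722 * b
        count += 1
    if count == 0:
        raise ValueError("no pixels supplied")
    return total / (255 * count)


def lighting_gap(start_pixels, end_pixels) -> float:
    """Absolute difference in average brightness between two frames."""
    return abs(mean_luma(start_pixels) - mean_luma(end_pixels))


# Tiny synthetic frames: a mid-grey start and a slightly darker end.
start = [(128, 128, 128)] * 4
end = [(100, 100, 100)] * 4
gap = lighting_gap(start, end)
print(round(gap, 3), gap > LIGHTING_GAP_WARNING)
```

A gap above the threshold is a cue to relight or regrade one frame, not a guarantee that the transition will fail.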

Key takeaways for using Kling O1

Here's what to remember about using Kling O1 for your video projects:

- Multi-Elements revolutionizes video editing: Edit existing footage with text prompts—swap objects, add elements, delete unwanted items, or restyle aesthetics without VFX expertise or reshooting

- O1's Multi-Modal Video Engine processes text, images, and video: This multi-modal approach gives you unprecedented control whether you're generating new content or editing existing footage

- Three modes for different workflows: Multi-Elements for editing, text-to-video for generation, frame mode for precise image-to-video transitions

- VEED integration streamlines your workflow: Starting December 2nd, you can generate, edit, and enhance all in one platform

- Professional mode is worth it for final deliverables: The quality difference shows up in client work, branded content, and any video that represents your public image

🔧 Next Step: Try Kling O1 in VEED's AI Playground to experience how multi-modal video generation differs from traditional AI video tools. Start with something specific like "a coffee cup being filled, steam rising, morning light from window" and compare the motion quality to what you'd get elsewhere.